Robots Visualizing Reality: The Future is Here!

Imagine a world where machines don’t just process data, but truly see and understand the complex, ever-changing visual landscape around them. This isn’t science fiction anymore: we are witnessing the dawn of an era in which advanced robot visualization capabilities are rapidly transforming how we interact with technology and the world. This leap allows robots to perceive, interpret, and react to their environment with far greater sophistication than earlier systems, paving the way for innovations that were recently out of reach.

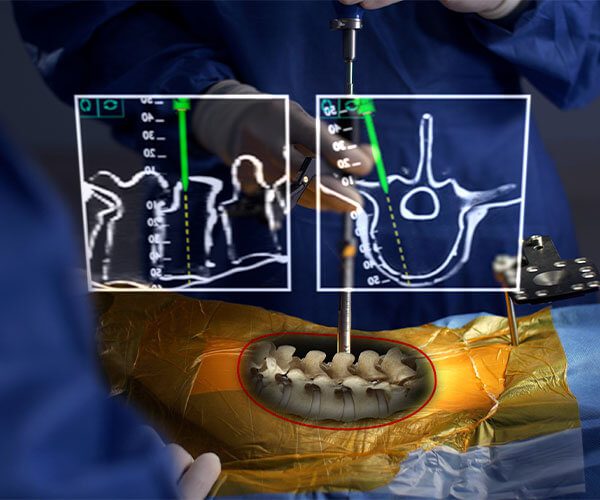

From self-driving cars navigating bustling city streets to surgical robots assisting in delicate operations, the ability for robots to accurately visualize and interpret their surroundings is paramount. This article dives deep into the exciting advancements, the underlying technologies, and the profound implications of this revolutionary phase in robotics.

The Evolution of Robot Vision: From Basic Sensors to Intelligent Perception

For decades, robots relied on rudimentary sensors to gather information, such as simple light sensors or basic proximity detectors. While functional for specific tasks, this level of perception was akin to feeling one’s way through a dark room. The true revolution began with the integration of cameras and sophisticated image processing techniques.

Early Robotics: The Age of Simple Data Input

In the early days of robotics, “vision” was often limited to detecting the presence or absence of objects. Think of industrial robots on assembly lines, programmed to pick up a specific part. Their “vision” was a pre-defined pattern match, lacking any real understanding of context or variation.

The Rise of Computer Vision

The advent of computer vision marked a significant turning point. Algorithms started to emerge that could process pixel data from cameras, enabling robots to identify shapes, colors, and even basic textures. This allowed for more flexible automation, where robots could adapt to slight variations in object placement.
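
To make this concrete, here is a minimal sketch of color-based detection, one of the simplest computer vision techniques: mark the pixels close to a target color, then report the bounding box of the matching region. It assumes a tiny image stored as rows of (R, G, B) tuples; the function names and the tolerance value are illustrative, not taken from any particular library.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255

def color_mask(image: List[List[Pixel]], target: Pixel, tol: int) -> List[List[bool]]:
    """Mark pixels whose every channel is within `tol` of the target color."""
    return [
        [all(abs(c - t) <= tol for c, t in zip(px, target)) for px in row]
        for row in image
    ]

def bounding_box(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """Return (min_row, min_col, max_row, max_col) of matching pixels, or None."""
    coords = [(r, c) for r, row in enumerate(mask) for c, hit in enumerate(row) if hit]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return min(rows), min(cols), max(rows), max(cols)

RED, GREY = (220, 30, 30), (128, 128, 128)
image = [
    [GREY, GREY, GREY, GREY],
    [GREY, RED, RED, GREY],
    [GREY, RED, (200, 40, 35), GREY],  # a slightly different shade of red
    [GREY, GREY, GREY, GREY],
]
mask = color_mask(image, target=(210, 35, 30), tol=20)
print(bounding_box(mask))  # (1, 1, 2, 2)
```

The tolerance is what gives this approach its limited flexibility: the slightly different red pixel still matches, but a lighting change large enough to push the colors past the tolerance would break the detection.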

Unveiling the “Visualizing Phase”: What It Means for Robots

In this article, the “visualizing phase” refers to the stage where a robot’s perception system moves beyond mere image recognition to a deeper, more contextual understanding of its visual input: building a dynamic, three-dimensional model of the world and understanding the relationships between the objects within it.
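
One simple way to represent such a model is a list of objects with 3D positions, plus spatial relations derived from those positions. The sketch below is a hypothetical illustration: the `SceneObject` fields, the “on” and “near” rules, and their distance thresholds are assumptions chosen for readability, not a standard scene-graph format.

```python
from dataclasses import dataclass
from itertools import permutations
from typing import List, Tuple

@dataclass
class SceneObject:
    label: str
    x: float       # metres to the robot's right
    y: float       # metres ahead of the robot
    z: float       # height of the object's base above the floor, metres
    height: float  # metres

def relations(objects: List[SceneObject], near_dist: float = 1.0) -> List[Tuple[str, str, str]]:
    """Derive (subject, relation, object) triples from object positions."""
    rels = []
    for a, b in permutations(objects, 2):
        horizontal = ((a.x - b.x) ** 2 + (a.y - b.y) ** 2) ** 0.5
        # "a on b": roughly above b, with a's base resting on b's top surface.
        if horizontal < 0.3 and abs(a.z - (b.z + b.height)) < 0.05:
            rels.append((a.label, "on", b.label))
        elif horizontal < near_dist:
            rels.append((a.label, "near", b.label))
    return rels

scene = [
    SceneObject("table", 0.0, 2.0, 0.0, 0.75),
    SceneObject("cup", 0.1, 2.0, 0.75, 0.10),
    SceneObject("chair", 0.6, 2.3, 0.0, 0.90),
]
for triple in relations(scene):
    print(triple)
```

Because the relations are recomputed from positions, they update automatically as the robot’s 3D estimates change, for example when someone lifts the cup off the table.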

Beyond 2D: Embracing Depth and Dimension

Traditional cameras capture a 2D image. However, the real world is three-dimensional. Advancements in stereo vision, LiDAR (Light Detection and Ranging), and structured light scanning allow robots to perceive depth, measure distances, and create detailed 3D maps of their surroundings. This is crucial for tasks requiring precise navigation and manipulation.
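
For stereo vision, depth follows from simple geometry. With two rectified cameras separated by a baseline B and sharing a focal length f (in pixels), a point that appears shifted by a disparity of d pixels between the left and right images lies at depth Z = f * B / d. The sketch assumes an illustrative camera with a 700-pixel focal length and a 12 cm baseline.

```python
def stereo_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth in metres of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means the point is at infinity")
    return focal_px * baseline_m / disparity_px

FOCAL_PX, BASELINE_M = 700.0, 0.12  # assumed camera parameters
for d in (84.0, 42.0, 8.4):
    print(f"disparity {d:4.1f} px -> depth {stereo_depth(d, FOCAL_PX, BASELINE_M):.2f} m")
```

Halving the disparity doubles the depth, so at long range a one-pixel matching error turns into a large depth error; this is why stereo accuracy degrades with distance.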

Semantic Understanding: What Objects *Are*

The real magic happens when robots can not only detect an object but also understand what it is. Thanks to deep learning and advanced AI models, robots can now identify a vast array of objects – a chair, a table, a person, a dog – and even understand their potential function or interaction. This semantic understanding is what truly elevates robot perception.
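
A recognition network typically outputs one score (a logit) per known class; a softmax turns these scores into probabilities, and the robot acts on the most likely label only if it is confident enough. The sketch below assumes the logits have already been produced by some classifier, and the `AFFORDANCES` table linking labels to possible interactions is a hypothetical stand-in for the knowledge of an object’s potential function.

```python
import math
from typing import Dict, List, Tuple

LABELS = ["chair", "table", "person", "dog"]
# Hypothetical lookup from a recognised class to interactions a robot might consider.
AFFORDANCES: Dict[str, List[str]] = {
    "chair": ["sit on", "move aside"],
    "table": ["place object on"],
    "person": ["yield right of way", "hand object to"],
    "dog": ["keep distance"],
}

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def interpret(logits: List[float], min_conf: float = 0.5) -> Tuple[str, float, List[str]]:
    """Return (label, confidence, affordances), or 'unknown' if not confident enough."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < min_conf:
        return "unknown", probs[best], []
    label = LABELS[best]
    return label, probs[best], AFFORDANCES[label]

# Scores as they might come from a classifier looking at a person.
print(interpret([0.2, 0.1, 3.0, 0.4]))
```

The confidence threshold matters in practice: a robot that treats an uncertain detection as “unknown” can fall back to cautious behavior instead of acting on a guess.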

Real-time Processing and Adaptation

The visual world is constantly changing. Robots at this stage rely on powerful processors and efficient algorithms to process visual data in real time, fast enough to keep pace with their cameras. This lets them react quickly to dynamic events, such as a person walking in front of the robot or a sudden change in lighting conditions. This adaptability is key to safe and effective operation in complex environments.
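
Detecting a sudden lighting change can be as simple as comparing each frame’s average brightness against a running expectation. The sketch below is a hypothetical illustration, not a production exposure controller: it tracks slow drifts with an exponential moving average and flags abrupt jumps, after which a real system might re-adjust camera exposure or slow down. Frames are assumed to be small grayscale images stored as lists of rows, and the smoothing factor and threshold are arbitrary choices.

```python
from typing import List, Optional

Frame = List[List[int]]  # grayscale pixel values, 0-255

def mean_brightness(frame: Frame) -> float:
    return sum(map(sum, frame)) / sum(len(row) for row in frame)

class LightingMonitor:
    """Flags abrupt global brightness changes between consecutive frames."""

    def __init__(self, alpha: float = 0.2, threshold: float = 40.0):
        self.alpha = alpha          # weight of the newest frame in the running average
        self.threshold = threshold  # brightness jump that counts as "sudden"
        self.expected: Optional[float] = None

    def update(self, frame: Frame) -> bool:
        brightness = mean_brightness(frame)
        if self.expected is None:       # first frame: nothing to compare against yet
            self.expected = brightness
            return False
        if abs(brightness - self.expected) > self.threshold:
            self.expected = brightness  # re-baseline on the new conditions
            return True
        # Gradual change (clouds, dusk): follow it smoothly.
        self.expected = (1 - self.alpha) * self.expected + self.alpha * brightness
        return False

monitor = LightingMonitor()
levels = [100, 102, 101, 200, 201]  # the lights switch on at the fourth frame
flags = [monitor.update([[v] * 4 for _ in range(3)]) for v in levels]
print(flags)  # [False, False, False, True, False]
```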

Key Technologies Powering Robot Visualization

Several cutting-edge technologies are converging to enable this advanced visual understanding in robots. These are the building blocks that allow machines to “see” like never before.

Artificial Intelligence and Machine Learning

At the heart of advanced robot visualization lies AI, particularly machine learning. Deep neural networks, trained on massive datasets of images and videos, are the engines that drive object recognition, scene understanding, and predictive analysis. These models learn to identify patterns and features that even humans might overlook.
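
The basic building block of the convolutional networks most often used for vision is a small kernel slid across the image. The sketch below applies one hand-written vertical-edge kernel followed by a ReLU; in a trained network the kernel values are learned from data rather than chosen by hand, and many such layers are stacked. It is a plain-Python illustration of the operation, not how deep-learning frameworks implement it efficiently.

```python
from typing import List

Matrix = List[List[float]]

def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D cross-correlation, which deep-learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(m: Matrix) -> Matrix:
    """Keep positive responses and zero out the rest."""
    return [[max(0.0, float(v)) for v in row] for row in m]

# A bright vertical stripe on a dark background (4 rows x 6 columns).
image = [[0, 0, 1, 1, 0, 0] for _ in range(4)]
# Responds positively to dark-to-bright transitions from left to right.
vertical_edge = [[-1, 0, 1] for _ in range(3)]
print(relu(conv2d(image, vertical_edge)))  # [[3.0, 3.0, 0.0, 0.0], [3.0, 3.0, 0.0, 0.0]]
```

The bright-to-dark edge on the right of the stripe produces negative responses, which the ReLU discards; a second kernel with flipped signs would be needed to detect it.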

Sensors: The Eyes of the Robot

A variety of sensors contribute to a robot’s visual perception:

- Cameras (RGB, Depth, Thermal): Standard RGB cameras provide color and detail. Depth cameras (like Intel RealSense or Azure Kinect) capture per-pixel distance information, from which 3D point clouds can be built. Thermal cameras detect heat signatures, which is useful in low light and for spotting warm objects such as people or animals.

- LiDAR: Emits laser pulses to measure distances and create highly accurate 3D maps, essential for autonomous navigation.

- IMUs (Inertial Measurement Units): While not directly visual, IMUs provide motion and orientation data, which is crucial for fusing with visual data to create a complete picture of the robot’s state and its environment.
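
Each of these sensors comes with a small piece of geometry or math that turns raw readings into something a robot can use. The sketch below shows three textbook versions under simplifying assumptions: LiDAR range from a pulse’s round-trip time, back-projection of a depth-camera pixel into a 3D point with an ideal pinhole model (the intrinsics `fx`, `fy`, `cx`, `cy` are made-up values), and a complementary filter that fuses a drifting gyroscope with a drift-free absolute angle, which could come from an accelerometer or a visual estimate. Real sensors add lens distortion, noise models, and calibration, all omitted here.

```python
from typing import Tuple

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def lidar_range(round_trip_s: float) -> float:
    """Distance to a target from a time-of-flight pulse (light travels out and back)."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def backproject(u: float, v: float, depth_m: float,
                fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole model: depth-image pixel (u, v) -> 3D point (x, y, z) in the camera frame."""
    return (u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m

def complementary_filter(angle: float, gyro_rate: float, absolute_angle: float,
                         dt: float, alpha: float = 0.98) -> float:
    """Blend the integrated gyro rate (smooth but drifting) with an absolute
    angle measurement (noisy but drift-free)."""
    return alpha * (angle + gyro_rate * dt) + (1 - alpha) * absolute_angle

print(lidar_range(66.7e-9))                                        # about 10 m
print(backproject(420, 240, 2.0, fx=600, fy=600, cx=320, cy=240))  # about (0.33, 0.0, 2.0)

# A stationary robot whose gyro has a 0.01 rad/s bias: integrating the gyro alone
# would drift to 0.1 rad after 10 s, while the fused estimate stays near zero.
angle = 0.0
for _ in range(1000):  # 10 s at 100 Hz
    angle = complementary_filter(angle, gyro_rate=0.01, absolute_angle=0.0, dt=0.01)
print(round(angle, 4))
```

With these settings the fused angle settles at about 0.005 rad instead of drifting without bound; the weight `alpha` trades smoothness against how quickly drift is corrected.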

Simultaneous Localization and Mapping (SLAM)

SLAM is the computational problem of building a map of an unknown environment while simultaneously keeping track of the robot’s own location within that map. Solving it is a fundamental capability for robots that need to navigate and operate autonomously in complex, dynamic spaces.
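
Real SLAM systems work in 2D or 3D with many landmarks and typically use extended Kalman filters, particle filters, or graph optimization. The toy sketch below keeps only the core idea: a single linear Kalman filter whose state holds both the robot’s position and one landmark’s position on a line, so every measurement refines the map and the robot’s location at the same time. The class name, noise values, and noise-free readings are illustrative assumptions.

```python
class Slam1D:
    """Jointly estimates robot position r and one landmark position l on a line.

    State s = [r, l] with 2x2 covariance P. Motion only moves r; the sensor
    measures the landmark's offset from the robot, z = l - r.
    """

    def __init__(self, motion_var: float = 0.05, meas_var: float = 0.1):
        self.s = [0.0, 0.0]                # robot starts at the map origin
        self.P = [[0.0, 0.0], [0.0, 1e3]]  # robot pose known, landmark unknown
        self.q, self.r = motion_var, meas_var

    def predict(self, u: float) -> None:
        """Move the robot by u; motion adds uncertainty to the pose only."""
        self.s[0] += u
        self.P[0][0] += self.q

    def update(self, z: float) -> None:
        """Correct both robot and landmark from one offset measurement."""
        P = self.P
        innovation = z - (self.s[1] - self.s[0])
        # With H = [-1, 1]: P H^T = [P01 - P00, P11 - P10] and S = H P H^T + R.
        pht = [P[0][1] - P[0][0], P[1][1] - P[1][0]]
        S = pht[1] - pht[0] + self.r
        K = [pht[0] / S, pht[1] / S]
        self.s = [self.s[i] + K[i] * innovation for i in range(2)]
        # P <- P - K S K^T, equivalent to (I - K H) P for this optimal gain.
        self.P = [[P[i][j] - K[i] * K[j] * S for j in range(2)] for i in range(2)]

slam = Slam1D()
true_landmark, true_robot = 5.0, 0.0
for _ in range(3):
    true_robot += 1.0
    slam.predict(1.0)
    slam.update(true_landmark - true_robot)  # noise-free reading, for illustration
# Both estimates end up close to the true values (robot 3.0, landmark 5.0).
print([round(x, 3) for x in slam.s], round(slam.P[1][1], 3))
```

The landmark’s variance collapses from 1000 to a small value after the first reading, and the off-diagonal terms of `P` are what let a later correction to the landmark also shift the robot’s estimate.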

Augmented Reality (AR) and Virtual Reality (VR) Integration

While not directly “robot visualization” in the sense of the robot’s internal perception, AR and VR play a crucial role in how humans interact with and direct robots. For example, a technician might use AR glasses to see overlaid data about a robot’s status or to guide its actions remotely. This symbiotic relationship enhances the overall effectiveness of robotic systems.

Applications: Where Robot Visualization is Making an Impact

The implications of robots being able to visualize and understand their environment are vast and are already reshaping numerous industries. The potential for increased efficiency, safety, and new service offerings is immense.

Autonomous Vehicles

This is perhaps the most prominent application. Self-driving cars rely heavily on advanced visualization to perceive pedestrians, other vehicles, road signs, and lane markings. Sophisticated algorithms interpret this data to make split-second driving decisions aimed at navigating safely. The ability to predict the movement of other road users builds directly on this advanced perception.
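
Trajectory prediction in production vehicles often relies on learned models, but the simplest baseline, a constant-velocity model, already shows the idea: extrapolate each tracked road user forward in time and check whether any predicted position comes too close to the car’s own predicted path. The sketch assumes the car drives straight ahead at constant speed; the `Track` fields, the 3-second horizon, and the 1.5 m safety radius are illustrative choices.

```python
from dataclasses import dataclass

@dataclass
class Track:
    x: float   # metres ahead of the car
    y: float   # metres to the car's left
    vx: float  # m/s
    vy: float  # m/s

def predicts_conflict(track: Track, ego_speed: float, horizon_s: float = 3.0,
                      dt: float = 0.1, radius_m: float = 1.5) -> bool:
    """Constant-velocity check: does the track come within radius_m of the car,
    assumed to drive straight along +x at ego_speed, within the horizon?"""
    steps = int(round(horizon_s / dt))
    for k in range(1, steps + 1):
        t = k * dt
        px, py = track.x + track.vx * t, track.y + track.vy * t  # predicted road user
        ex = ego_speed * t                                       # predicted car position
        if ((px - ex) ** 2 + py ** 2) ** 0.5 < radius_m:
            return True
    return False

crossing = Track(x=20.0, y=4.0, vx=0.0, vy=-1.5)  # pedestrian stepping into the lane
leaving = Track(x=20.0, y=4.0, vx=0.0, vy=1.5)    # pedestrian walking away
print(predicts_conflict(crossing, ego_speed=10.0))  # True
print(predicts_conflict(leaving, ego_speed=10.0))   # False
```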

Robotics in Healthcare

Surgical robots, powered by high-definition cameras and advanced visualization, provide surgeons with enhanced precision and control. Robots are also being developed for patient care, assisting with tasks like medication delivery and patient monitoring, requiring them to navigate hospital corridors and recognize individuals.

Warehouse and Logistics Automation

Robots in warehouses can now identify, pick, and sort packages with high accuracy, even in cluttered environments. Advanced visualization allows them to navigate complex shelving systems, avoid obstacles, and optimize their routes, significantly boosting efficiency.
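
Route planning around shelving is a classic search problem. The sketch below uses A*, a standard shortest-path algorithm, on a small grid map where `#` marks shelves; it illustrates the technique rather than the planner any particular warehouse system uses, and it assumes 4-connected moves of equal cost.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid of '.' (free) and '#' (shelf) cells."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance never overestimates the remaining cost, so A* stays optimal.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    best_g: Dict[Cell, int] = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:  # walk back to the start
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale entry: a cheaper route to this cell was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_g = g + 1
                if new_g < best_g.get(nxt, float("inf")):
                    best_g[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (new_g + h(nxt), new_g, nxt))
    return None  # goal unreachable

warehouse = [
    "....",
    ".##.",
    ".##.",
    "....",
]
print(astar(warehouse, (0, 0), (3, 3)))
```

The heuristic is what distinguishes A* from a plain breadth-first search: it steers the search toward the goal, which matters on large warehouse maps with many robots replanning continuously.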

Inspection and Maintenance

Drones equipped with advanced cameras can inspect hard-to-reach infrastructure like bridges, wind turbines, and pipelines. Their ability to visualize defects, measure dimensions, and create detailed reports is invaluable for preventative maintenance and safety.
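
Measuring a defect from an image requires knowing the distance to the surface. Under a pinhole camera model, and assuming the defect lies roughly parallel to the image plane, a feature spanning s pixels at distance D with a focal length of f pixels is about s * D / f metres long. The defect IDs, pixel spans, and the 0.2 m reporting threshold below are made-up values for illustration.

```python
from typing import List, Tuple

def span_to_metres(span_px: float, distance_m: float, focal_px: float) -> float:
    """Approximate real-world length of an image feature parallel to the image plane."""
    return span_px * distance_m / focal_px

def defects_to_report(defects: List[Tuple[str, float]], distance_m: float,
                      focal_px: float, max_length_m: float) -> List[Tuple[str, float]]:
    """Return (id, length in metres) for every defect longer than max_length_m."""
    report = []
    for defect_id, span_px in defects:
        length = span_to_metres(span_px, distance_m, focal_px)
        if length > max_length_m:
            report.append((defect_id, round(length, 3)))
    return report

# Drone hovering 4 m from a surface, camera focal length 1200 px (assumed values).
cracks = [("crack-A", 150.0), ("crack-B", 30.0)]
print(defects_to_report(cracks, distance_m=4.0, focal_px=1200.0, max_length_m=0.2))
# [('crack-A', 0.5)]
```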

Consumer Robotics

From robotic vacuum cleaners that map your home to companion robots that recognize faces and respond to gestures, consumer robots are becoming increasingly sophisticated. Their ability to understand their surroundings allows for more intuitive and helpful interactions.
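
A common way to build such a map is an occupancy grid: the floor is divided into cells, and each sensor reading raises or lowers the belief that a cell is blocked. The sketch below uses the standard log-odds update, simplified so that readings only travel along a grid row; the sensor-model probabilities (0.7 for a hit, 0.3 for a pass-through) are assumptions, and this is not a description of how any specific product maps a home.

```python
import math
from typing import List

# Assumed inverse sensor model, expressed as log-odds increments.
L_HIT = math.log(0.7 / 0.3)   # the beam ended in this cell: probably occupied
L_PASS = math.log(0.3 / 0.7)  # the beam passed through: probably free

class OccupancyGrid:
    def __init__(self, rows: int, cols: int):
        # Log-odds 0.0 means probability 0.5, i.e. "unknown".
        self.logodds: List[List[float]] = [[0.0] * cols for _ in range(rows)]

    def integrate_reading(self, row: int, col: int, hit_cells: int) -> None:
        """Robot at (row, col) senses an obstacle hit_cells to its right (+col).
        Assumes hit_cells >= 1 and that the hit cell lies inside the grid."""
        for c in range(col + 1, col + hit_cells):
            self.logodds[row][c] += L_PASS
        self.logodds[row][col + hit_cells] += L_HIT

    def probability(self, row: int, col: int) -> float:
        """Convert log-odds back to an occupancy probability."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.logodds[row][col]))

grid = OccupancyGrid(rows=1, cols=6)
for _ in range(2):  # two consistent readings: a wall 4 cells away
    grid.integrate_reading(row=0, col=0, hit_cells=4)
print([round(grid.probability(0, c), 2) for c in range(6)])
# [0.5, 0.16, 0.16, 0.16, 0.84, 0.5]
```

Log-odds make repeated evidence cheap to accumulate (just addition), and cells the sensor never reached, such as the one behind the wall, stay at 0.5.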

The Future of Robot Perception: What’s Next?

The current advancements are just the beginning. The field of robot visualization is evolving rapidly, with researchers pushing the boundaries of what’s possible.

Enhanced Scene Understanding and Prediction

Future robots will move beyond simply identifying objects to understanding the context and predicting future events. This means a robot might not just see a ball, but anticipate that a child is about to throw it and react accordingly. This level of predictive understanding is crucial for truly seamless human-robot collaboration.

Multi-Modal Fusion

Combining visual data with other sensory inputs – such as audio, touch, and even smell (in the future) – will create a richer, more comprehensive understanding of the environment for robots. This multi-modal fusion will lead to more robust and adaptable robotic systems.
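
A basic form of sensor fusion combines independent estimates of the same quantity, weighting each by how reliable it is. The sketch below uses inverse-variance weighting, the minimum-variance way to average independent, unbiased estimates; the camera and ultrasonic readings and their variances are invented for illustration.

```python
from typing import List, Tuple

def fuse(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of independent (value, variance) estimates.
    Returns the fused value and its variance."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total

camera = (2.0, 0.04)      # distance in metres, variance in m^2 (assumed)
ultrasonic = (2.2, 0.01)  # a more precise reading of the same distance (assumed)
value, variance = fuse([camera, ultrasonic])
print(f"{value:.2f} m, variance {variance:.3f} m^2")  # 2.16 m, variance 0.008 m^2
```

The fused variance is smaller than either input’s, which is the practical payoff of combining modalities; the result also leans toward the more trustworthy sensor.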

Ethical Considerations and Safety

As robots become more capable of perceiving and interacting with the world, ethical considerations surrounding their deployment become increasingly important. Ensuring safety, privacy, and accountability will be paramount as we integrate these advanced systems into our daily lives.

The journey of robots through this visualizing phase is a testament to human ingenuity. It’s a field that promises to unlock new possibilities, enhance our lives, and redefine our relationship with technology. The world is becoming a more visually intelligent place, one robot at a time.

Frequently Asked Questions about Robot Visualization

- What is the primary goal of robot visualization?

- The primary goal is to enable robots to perceive, interpret, and understand their environment in a way that allows them to perform tasks safely and effectively.

- How does AI contribute to robot visualization?

- AI, particularly machine learning and deep learning, powers the algorithms that allow robots to recognize objects, understand scenes, and make decisions based on visual input.

- What are some key technologies used in robot visualization?

- Key technologies include cameras (RGB, depth), LiDAR, IMUs, AI algorithms, and SLAM.

- What are the future trends in robot visualization?

- Future trends include enhanced scene understanding, predictive capabilities, and multi-modal sensory fusion.

The rapid advancements in robot visualization are not just technological marvels; they are the building blocks for a future where intelligent machines seamlessly integrate into our society, enhancing our capabilities and opening up avenues for progress we are only beginning to imagine.