AI Progress: How to Spark Innovation Without Fear

In the whirlwind of technological advancement, few areas capture our imagination, and sometimes our apprehension, quite like artificial intelligence. We stand on the cusp of unprecedented breakthroughs, yet a critical question looms: how do we ensure sustained AI progress without stifling the very innovation that drives it? It’s a delicate dance between pushing boundaries and establishing necessary safeguards, a balance that will define our digital future.

The Relentless March of AI Progress

Artificial intelligence is not just a buzzword; it’s a transformative force reshaping industries, economies, and daily life. From medical diagnostics to autonomous systems, the capabilities of modern AI are expanding at an astonishing rate. This relentless forward momentum promises solutions to some of humanity’s most complex challenges, offering a glimpse into a world of enhanced efficiency, discovery, and convenience.

However, with great power comes great responsibility. The rapid pace of development often outstrips our ability to fully understand its implications, leading to legitimate concerns about ethical use, societal impact, and potential risks. This is where the conversation around regulation inevitably enters the fray.

Regulation: Fueling or Fearing AI Innovation?

The push for regulation in the AI space is understandable. Ensuring fairness, privacy, and accountability is paramount as AI systems become more integrated into critical infrastructure. Yet there’s a palpable tension: could an overly cautious or poorly structured regulatory environment inadvertently put the brakes on vital AI progress?

The Argument for Responsible AI Regulation

Advocates for regulation highlight several crucial reasons why oversight is not just beneficial, but essential:

- Ensuring Ethical AI: Guidelines can prevent bias in algorithms, protect user data, and mandate transparency in decision-making processes.

- Mitigating Risks: From autonomous vehicle safety to the responsible deployment of AI in warfare, regulation can establish guardrails against misuse and unintended harm.

- Building Public Trust: Clear rules can foster confidence among the public, encouraging broader acceptance and adoption of AI technologies.

- Promoting Fair Competition: Regulation can prevent monopolies and ensure a level playing field for all innovators.

Without a framework, the potential for significant societal disruption, ethical dilemmas, and even catastrophic failures increases. Organizations like Stanford’s Institute for Human-Centered Artificial Intelligence (HAI) are actively researching these critical intersections.

When Oversight Becomes a Barrier to Advancement

On the flip side, innovators often voice concerns that excessive or premature regulation can stifle creativity and slow down the very technological advancement we hope to achieve. This fear isn’t unfounded; overly prescriptive rules can:

- Increase Development Costs: Compliance can become an expensive, time-consuming burden, especially for smaller startups.

- Limit Experimentation: The iterative nature of AI development requires freedom to test, fail, and learn. Rigid rules can make this difficult.

- Create Regulatory Lag: Technology evolves far faster than legislation. Rules designed for yesterday’s AI might be irrelevant or detrimental to tomorrow’s innovations.

- Drive Innovation Offshore: Companies might choose to develop in regions with less stringent regulations, potentially leading to a “race to the bottom” or a loss of domestic talent.

The core challenge lies in finding a sweet spot where necessary safeguards are in place without inadvertently penalizing the bold steps required for true AI progress.

Fostering Fearless AI Innovation Through Smart Governance

The path forward requires a nuanced approach. Instead of an ‘either/or’ mentality, we need to cultivate an ecosystem where innovation thrives alongside intelligent governance.

Dialogue and Collaboration are Key

Policymakers must engage directly with researchers, developers, and industry leaders to understand the technology’s nuances. Conversely, innovators need to proactively participate in shaping policy, sharing insights into both potential and pitfalls. This collaborative spirit can lead to regulation that is informed, effective, and forward-looking.

Designing Agile Regulatory Frameworks

Given AI’s rapid evolution, static, one-size-fits-all regulations are unlikely to succeed. Instead, we need agile frameworks that can adapt. This might include:

- Principle-Based Guidelines: Focusing on broad ethical principles rather than rigid technical specifications.

- Regulatory Sandboxes: Controlled environments where innovators can test new AI applications under relaxed regulations, with close monitoring.

- Continuous Review: Mechanisms for regularly updating and refining policies as the technology matures and new challenges emerge.

The Future of AI Progress: A Balanced Horizon

The future of AI progress depends on our collective ability to navigate this complex landscape. By fostering open dialogue, embracing adaptive governance, and prioritizing both innovation and responsibility, we can unlock AI’s immense potential. This isn’t about choosing between progress and safety; it’s about intelligently integrating both to build a future where AI serves humanity’s best interests without unnecessary fear.

The journey ahead demands foresight, courage, and a commitment to collaborative problem-solving. Let’s ensure that the steps we take today pave the way for a generation of AI that is not only powerful but also profoundly beneficial.

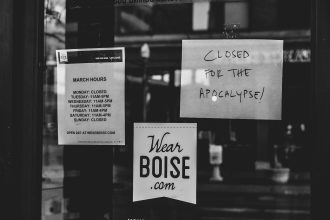

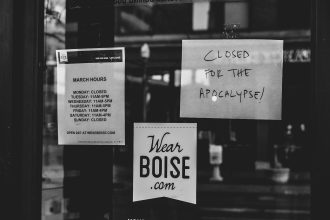

Featured image provided by Pexels — photo by Tara Winstead